

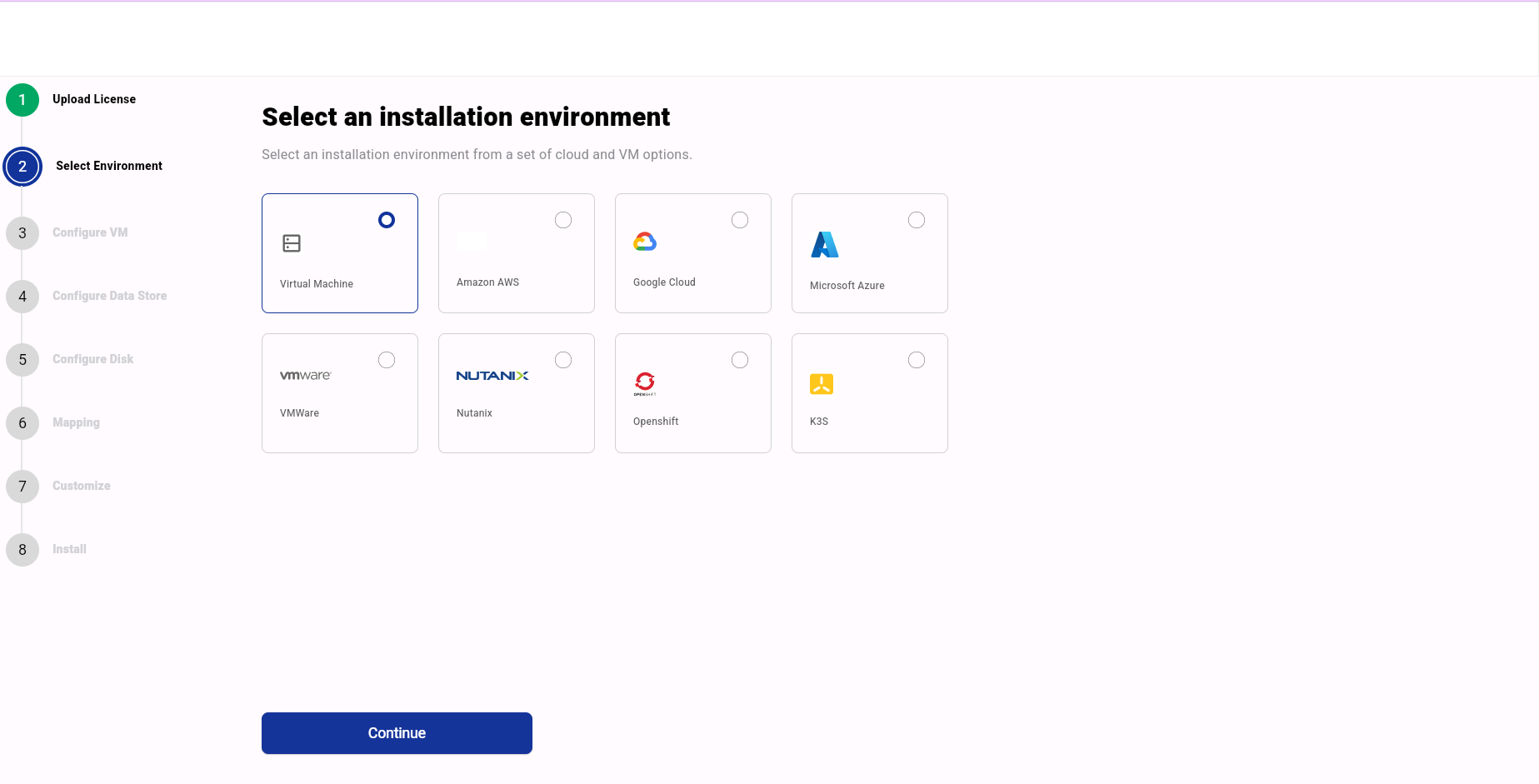

vuLauncher is an application that installs vuSmartMaps™ in a Virtual Machine (VM) based environment. It provides a graphical user interface (UI) in which users enter the essential details of the target environment.


Before utilizing vuLauncher, ensure the following prerequisites are met:


💡Note: Provide user name/user group according to the environment.


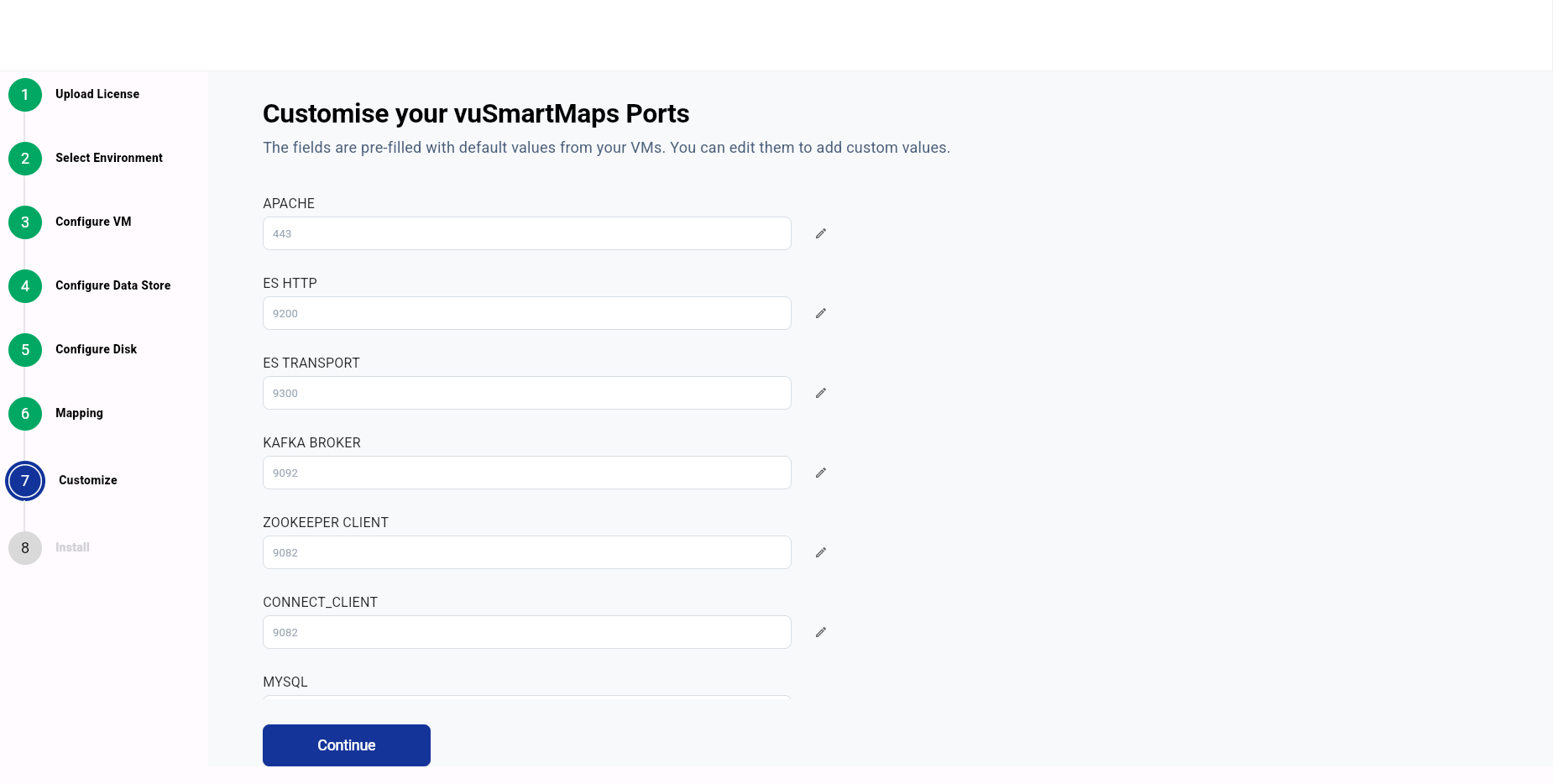

Ports Description


Before proceeding, ensure that the following ports are properly configured on your system:


| SN | Port | Protocol | Description |
|---|---|---|---|
| 1 | 6443 | TCP | Orchestration API port. Must be open between all vuSmartMaps servers and site-manager servers. |
| 2 | 2379 | TCP | Orchestration key-value DB port. Must be open between all vuSmartMaps servers and site-manager servers. |
| 3 | 2380 | TCP | Orchestration key-value DB port. Must be open between all vuSmartMaps servers and site-manager servers. |
| 4 | 10250 | TCP | Orchestration service port. Must be open between all vuSmartMaps servers and site-manager servers. |
| 5 | 10259 | TCP | Orchestration service port. Must be open between all vuSmartMaps servers and site-manager servers. |
| 6 | 10257 | TCP | Orchestration service port. Must be open between all vuSmartMaps servers and site-manager servers. |
| 7 | 9200 | TCP | Time Series NoSQL database port. Must be open between all vuSmartMaps servers and site-manager servers. |
| 8 | 9300 | TCP | Time Series NoSQL database port. Must be open between all vuSmartMaps servers and site-manager servers. |
| 9 | 6379 | TCP | In-memory database port. Must be open between all vuSmartMaps servers and site-manager servers. |
| 10 | 9082 | TCP | Kafka API port. Must be open between all vuSmartMaps servers and site-manager servers. |
| 11 | 9092 | TCP | Kafka server port. Must be open between all vuSmartMaps servers and site-manager servers. |
| 12 | 2181 | TCP | Kafka server port. Must be open between all vuSmartMaps servers and site-manager servers. |
| 13 | 2888 | TCP | Kafka server port. Must be open between all vuSmartMaps servers and site-manager servers. |
| 14 | 3888 | TCP | Kafka server port. Must be open between all vuSmartMaps servers and site-manager servers. |
| 15 | 443 | TCP | UI port. Must be open between all vuSmartMaps servers and site-manager servers, and accessible from the desktop. |
| 16 | 8080 | TCP | Installer service port. Must be open between all vuSmartMaps servers and site-manager servers, and accessible from the desktop. |
| 17 | 5432 | TCP | Time Series SQL database port. Must be open between all vuSmartMaps servers and site-manager servers. |
| 18 | 22 | TCP | SSH port. Must be open between all vuSmartMaps servers and site-manager servers. |
| 19 | 30910, 30901 | TCP | Object storage service ports. Must be open between all vuSmartMaps servers and site-manager servers, and accessible from the desktop. |
| 20 | 13000 | TCP | Webhook port. Must be open between all vuSmartMaps servers and site-manager servers. |
| 21 | 8123 | TCP | HyperScale database port. Must be open between all vuSmartMaps servers and site-manager servers. |
| 22 | 9000 | TCP | HyperScale database port. Must be open between all vuSmartMaps servers and site-manager servers. |
| 23 | 8472 | UDP | VXLAN port. Must be open between all vuSmartMaps servers and site-manager servers. |
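As a rough pre-flight check, the TCP ports listed above can be probed from one server to another. The sketch below is not part of vuLauncher: the helper names `is_port_open` and `unreachable_ports` are hypothetical, and it assumes Python 3 is available on the probing machine. A port only reports as open when a service is already listening on it, so before installation a closed result may simply mean that nothing is running yet rather than that a firewall is blocking it. The UDP port 8472 is left out, since a TCP connect cannot verify UDP reachability.

```python
import socket

# TCP ports from the table above (8472/UDP is excluded: a TCP connect cannot test it).
TCP_PORTS = [6443, 2379, 2380, 10250, 10259, 10257, 9200, 9300, 6379, 9082,
             9092, 2181, 2888, 3888, 443, 8080, 5432, 22, 30910, 30901,
             13000, 8123, 9000]


def is_port_open(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable hosts.
        return False


def unreachable_ports(host: str, ports=TCP_PORTS, timeout: float = 2.0) -> list:
    """Return the subset of ports on host that did not accept a connection."""
    return [p for p in ports if not is_port_open(host, p, timeout)]
```

Calling `unreachable_ports("<server-address>")` from each node, with the address of another vuSmartMaps or site-manager server substituted in, gives the list of ports that still need attention on that path.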


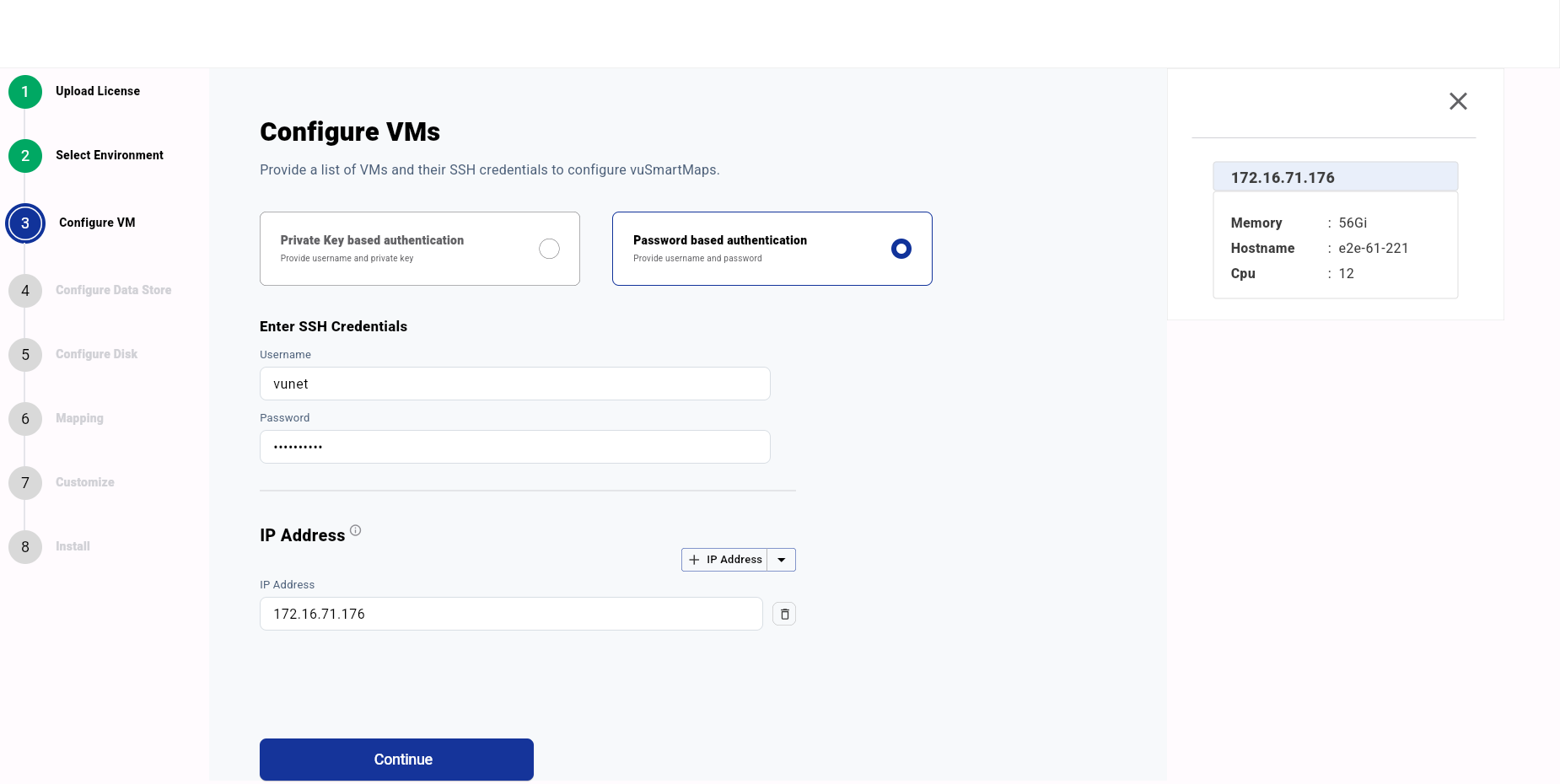

7. Ensure uniform credentials across all VMs, i.e., the same password or private key on every VM.


💡Note: Apart from Traefik, services cannot be moved to other ports if their default ports conflict with something else. For this release, the default service ports are used.
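Because services other than Traefik cannot be moved off their default ports, it can help to confirm beforehand that nothing on a VM already occupies them. This is a minimal sketch, assuming Python 3 is available on the VM; `port_in_use` is a hypothetical helper, not a vuLauncher feature. It treats a bind failure with `EADDRINUSE` as a conflict and re-raises anything else, such as the permission error raised for ports below 1024 when not running as root.

```python
import errno
import socket


def port_in_use(port: int, host: str = "0.0.0.0") -> bool:
    """Return True if binding host:port fails because the address is already in use."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as s:
        try:
            s.bind((host, port))
        except OSError as exc:
            if exc.errno == errno.EADDRINUSE:
                return True
            # Any other failure (e.g. PermissionError for ports < 1024) is not a conflict.
            raise
        return False
```

For example, `[p for p in (6443, 9200, 9092) if port_in_use(p)]` would list which of those default ports are already taken on the local machine.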


💡Note: If you don’t have access to the download server, download the binaries directly from this URL

Contact [email protected] to obtain the username and password for the download server.


For a multi-node cluster installation of vuSmartMaps, it is recommended to run vuLauncher and vuSiteManager on a separate node, apart from all vuSmartMaps nodes.


A dedicated VM for SiteManager is required, with a minimum configuration of 200 GB disk space, 6 cores, and 64 GB RAM. This VM must have connectivity to all other nodes where vuSmartMaps will be installed, and its OS should be Linux.

License configuration is different for a multi-node installation. Contact [email protected] to get the license that matches the installation environment.
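The sizing requirement for the SiteManager VM can be checked with a short script before running the installer. The following is a sketch under stated assumptions, not a vuLauncher tool: the helper names are hypothetical, "disk space" is read here as the total size of the filesystem at the given path, and the RAM reading relies on `os.sysconf` values available on Linux. A VM provisioned with 64 GB often reports slightly less usable memory, so treat a small RAM shortfall as something to confirm rather than a definite failure.

```python
import os
import shutil

# Minimums stated for the SiteManager VM.
MIN_DISK_GB = 200
MIN_CORES = 6
MIN_RAM_GB = 64


def shortfalls(disk_gb: float, cores: int, ram_gb: float) -> list:
    """Return a description of every requirement that is not met."""
    problems = []
    if disk_gb < MIN_DISK_GB:
        problems.append(f"disk: {disk_gb:.0f} GB < {MIN_DISK_GB} GB")
    if cores < MIN_CORES:
        problems.append(f"cores: {cores} < {MIN_CORES}")
    if ram_gb < MIN_RAM_GB:
        problems.append(f"RAM: {ram_gb:.0f} GB < {MIN_RAM_GB} GB")
    return problems


def local_resources(path: str = "/") -> tuple:
    """Read total disk size at path, CPU count, and physical RAM (Linux)."""
    disk_gb = shutil.disk_usage(path).total / 1024**3
    cores = os.cpu_count() or 0
    ram_gb = os.sysconf("SC_PAGE_SIZE") * os.sysconf("SC_PHYS_PAGES") / 1024**3
    return disk_gb, cores, ram_gb
```

Running `shortfalls(*local_resources())` on the candidate VM returns an empty list when all three minimums are met.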


💡Note: The installation steps provided for single-node installation also apply to multi-node cluster installations of vuSmartMaps.


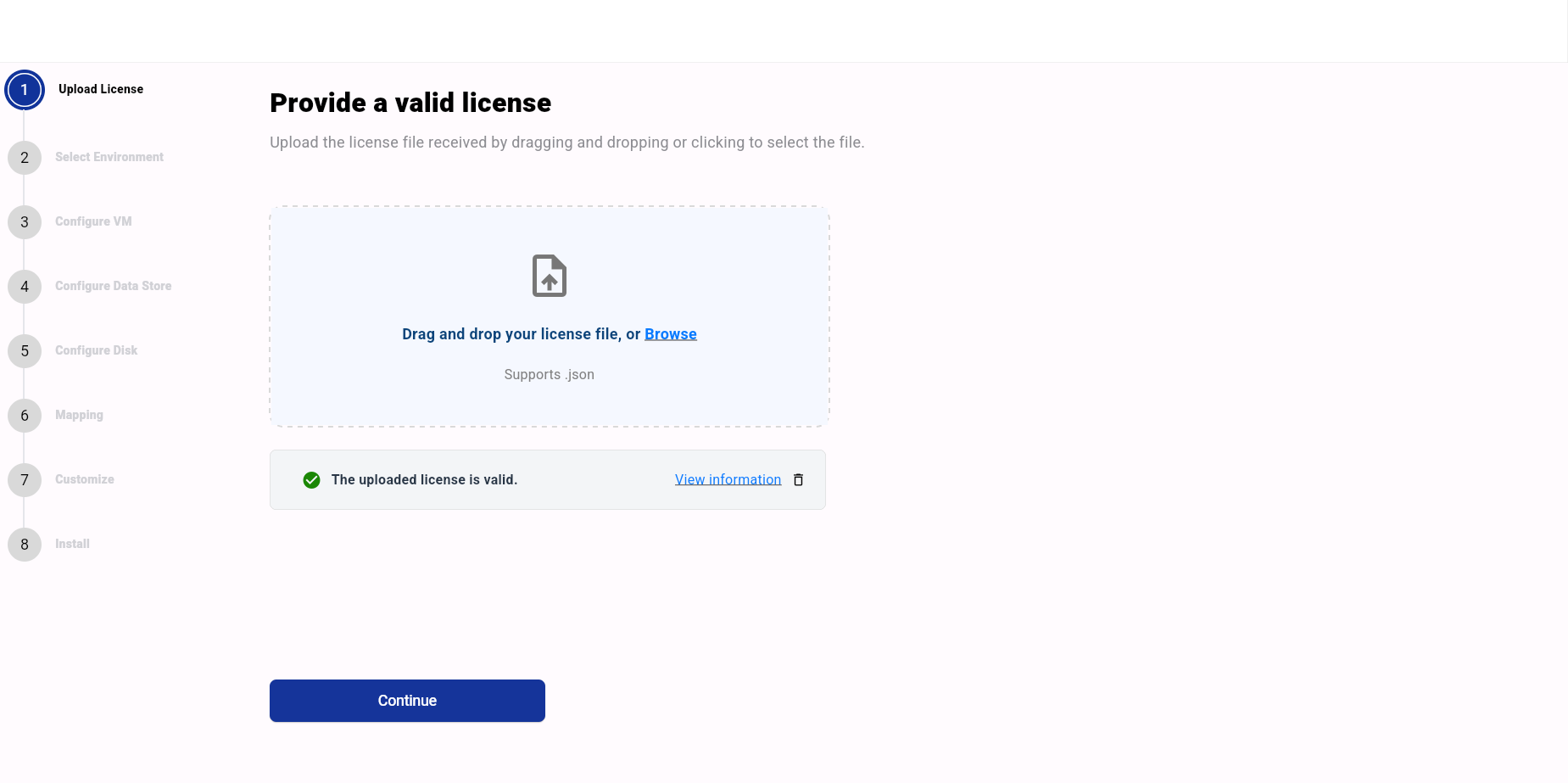

💡Note: Get the updated license file from [email protected]. When requesting it, mention the kind of setup (single node or multi node) you are deploying.


💡Note: The VM credentials are shared along with the VM details.


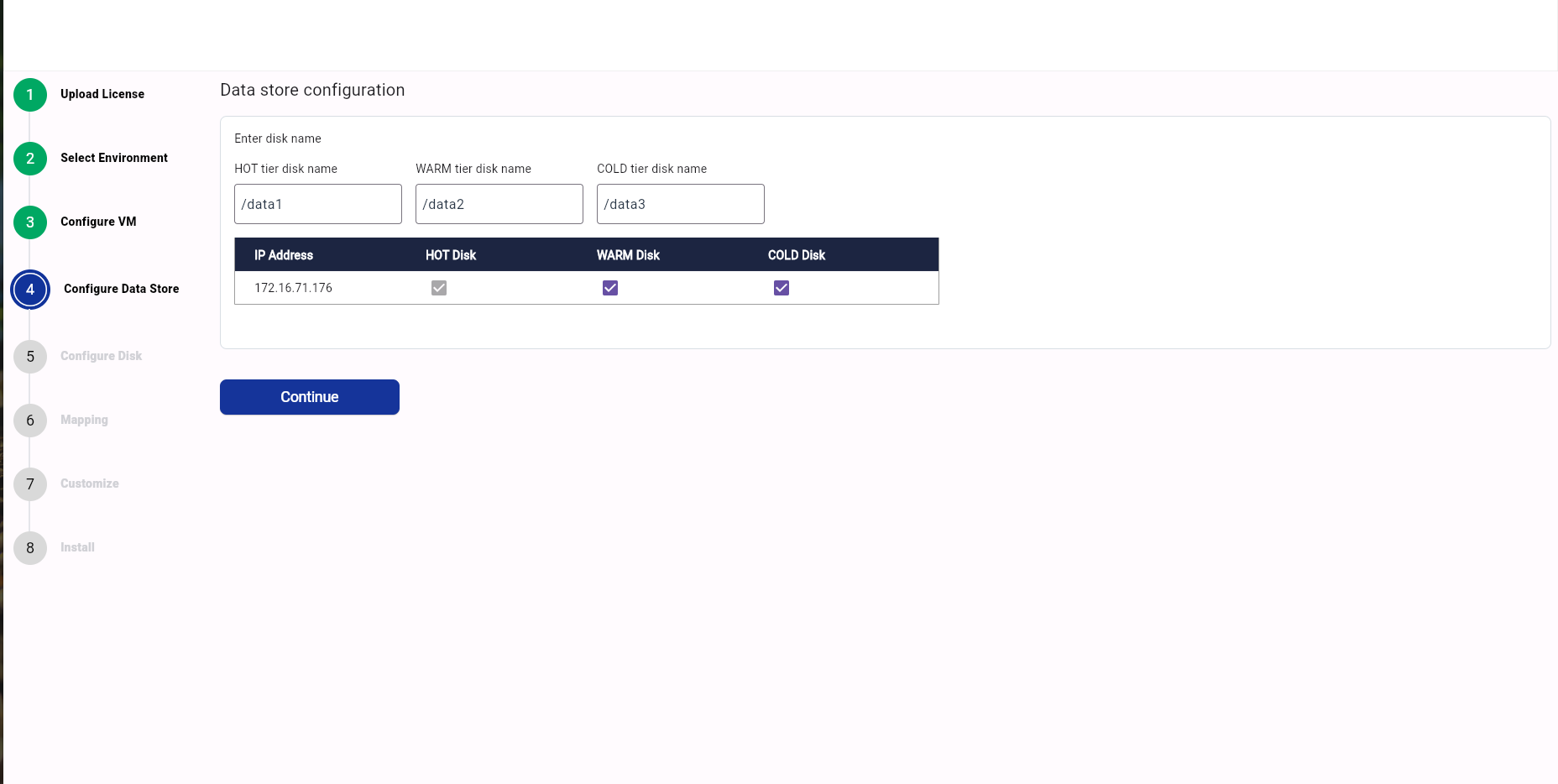

The following options are available for the Hyperscale data tier configuration:

💡Note: HOT Disk should always be selected.


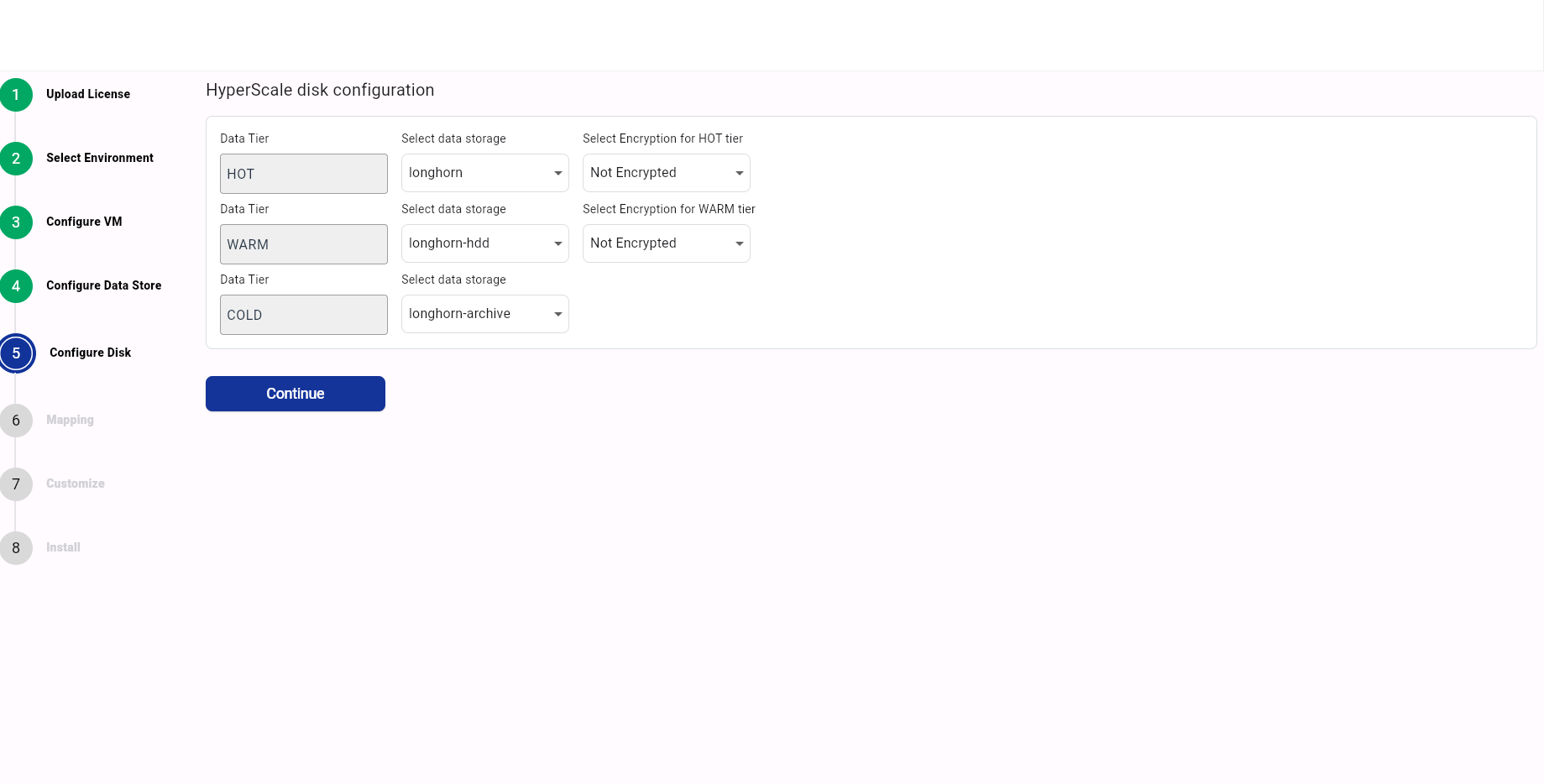

Based on the previous step, 3 storage classes will be configured for each type of storage. Assign the storage class and encryption setting for each disk accordingly.


💡Note: Encryption is not currently supported, so select the Not Encrypted option here.


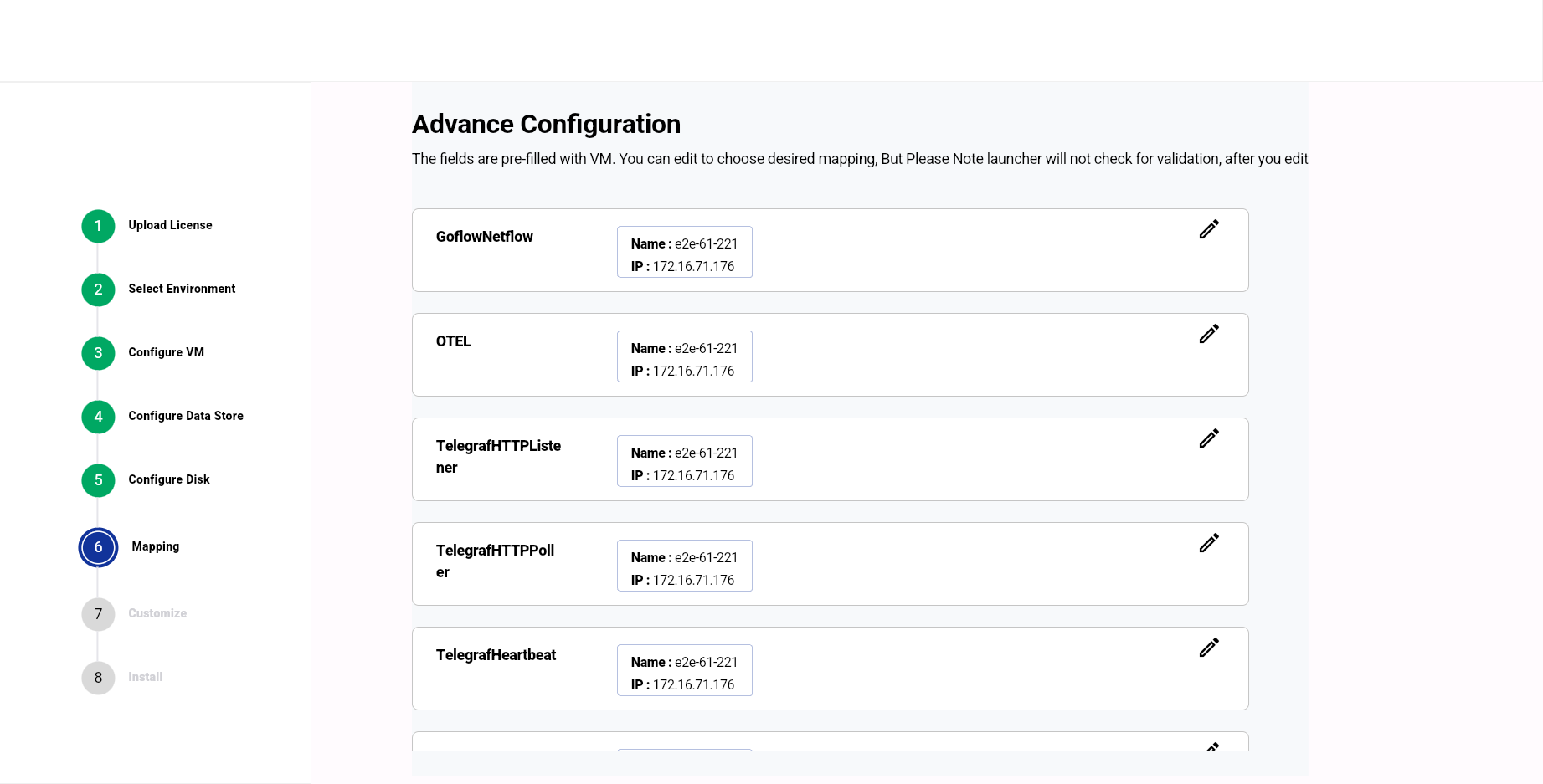


💡Note: Once you start the deployment, you cannot edit the configuration you provided.


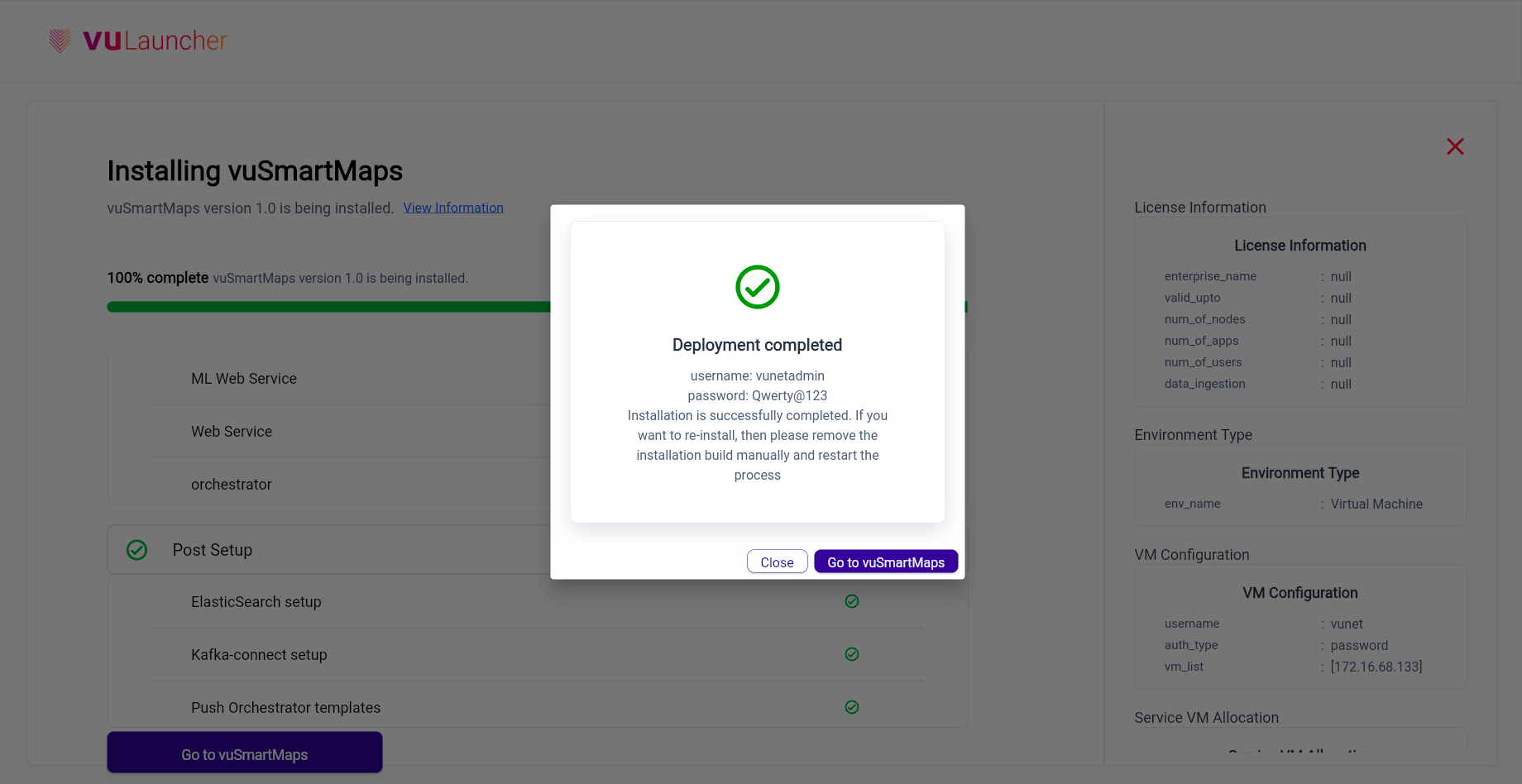

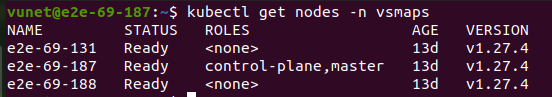

Once the deployment is successful, follow the steps below on the master node.


In the above output, the e2e-69-187 node is the master node, since the Role is assigned as Master.

echo unset KUBECONFIG >> ~/.bash_profile

sudo chown -R vunet:vunet /etc/kubernetes/admin.conf


Anticipate the following improvements in future releases:

