Deployment on Existing Kubernetes Cluster

To deploy vuSmartMaps™ on an existing Kubernetes cluster, follow the steps below:

💡Note: Provide data1, data2 and data3 only if no data partitions are available.
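
To decide whether data1, data2 and data3 are needed, you can first check on the node whether dedicated data partitions are already mounted. This is a minimal sketch, assuming a Linux host; the function name list_unmounted and the example paths /data1, /data2 and /data3 are hypothetical and should be replaced with the mount points used in your environment.

```shell
# Print each given path that is NOT a mount point, according to /proc/mounts.
# Linux only; mount paths containing spaces are escaped in /proc/mounts and
# are not handled by this simple comparison.
list_unmounted() {
  local path
  for path in "$@"; do
    # awk exits 0 if some line's mount point (2nd field) equals the path.
    if ! awk -v p="$path" '$2 == p { found = 1 } END { exit !found }' /proc/mounts 2>/dev/null; then
      echo "$path"
    fi
  done
}

# Example with hypothetical mount points; any path printed is not mounted:
#   list_unmounted /data1 /data2 /data3
```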

Provide the user name and user group that apply to your environment.
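
If you are unsure which values to enter, the account you are logged in as on the node is a reasonable starting point. The sketch below prints the current user and primary group and checks whether a given user and group exist on the host; the function names are hypothetical, and whether vuLauncher expects these exact values depends on your environment.

```shell
# Print the current user name and primary group as "user:group".
current_user_and_group() {
  printf '%s:%s\n' "$(id -un)" "$(id -gn)"
}

# Return 0 only if both the user and the group exist on this host.
user_group_exist() {
  local user="$1" group="$2"
  id -u "$user" >/dev/null 2>&1 && getent group "$group" >/dev/null 2>&1
}

# Example, using the vunet user/group referenced later on this page:
#   user_group_exist vunet vunet && echo "vunet:vunet exists"
```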

💡Note: Please get the updated license files from [email protected].

Also, mention the kind of setup (single node or multi node) you are deploying.
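
The kind of setup follows from the number of nodes in the cluster. A minimal sketch, assuming kubectl access to the cluster; the function name setup_kind is hypothetical:

```shell
# Classify a setup as single-node or multi-node from `kubectl get nodes`
# output on stdin, taken without the header row (--no-headers).
setup_kind() {
  local count
  # grep -c prints the number of non-empty lines; it exits 1 when that is 0.
  count=$(grep -c . || true)
  if [ "$count" -eq 0 ]; then
    echo "no nodes found" >&2
    return 1
  elif [ "$count" -eq 1 ]; then
    echo "single-node"
  else
    echo "multi-node"
  fi
}

# Usage on a machine with kubectl access:
#   kubectl get nodes --no-headers | setup_kind
```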

💡Note: Only a YAML file can be uploaded here.
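
If the upload is rejected, a quick local check can rule out the obvious causes. This sketch only checks that the file exists, is non-empty and has a lowercase .yaml or .yml extension; it does not validate YAML syntax, and how vuLauncher itself validates the file is not described here. The function name is hypothetical.

```shell
# Return 0 if the file exists, is non-empty, and ends in .yaml or .yml.
is_yaml_upload_candidate() {
  local file="$1"
  [ -s "$file" ] || return 1
  case "$file" in
    *.yaml|*.yml) return 0 ;;
    *) return 1 ;;
  esac
}

# Example (hypothetical file name):
#   is_yaml_upload_candidate ./cluster-config.yaml && echo "looks uploadable"
```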

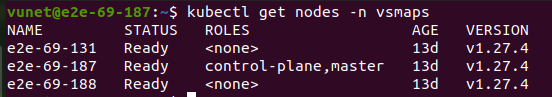

2. Click Continue. vuLauncher verifies access to the cluster and retrieves the details of its nodes.
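
If this verification fails, you can run a similar check by hand from any machine with kubectl installed. This sketch is not what vuLauncher runs internally; it assumes the uploaded YAML file is a kubeconfig for the target cluster, and the function name and file path are hypothetical.

```shell
# Confirm that a kubeconfig reaches the cluster, then list its nodes.
verify_cluster_access() {
  local kubeconfig="$1"
  if [ ! -r "$kubeconfig" ]; then
    echo "cannot read kubeconfig: $kubeconfig" >&2
    return 1
  fi
  if ! command -v kubectl >/dev/null 2>&1; then
    echo "kubectl not found in PATH" >&2
    return 1
  fi
  # Fails if the API server is unreachable or the credentials are rejected.
  kubectl --kubeconfig "$kubeconfig" cluster-info >/dev/null || return 1
  # Node names, status, roles, versions and internal IPs.
  kubectl --kubeconfig "$kubeconfig" get nodes -o wide
}

# Usage (hypothetical path to the uploaded YAML file):
#   verify_cluster_access ./cluster-config.yaml
```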

💡Note: Once you start the deployment, you cannot edit the configuration you provided.

Once the deployment is successful, follow the steps below on the master node.

In the above output, e2e-69-187 is the master node, since its role is shown as Master.
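
The master node can also be picked out of the `kubectl get nodes` output with a short filter. A minimal sketch, assuming the default column layout (NAME, STATUS, ROLES, AGE, VERSION); the function name is hypothetical. Newer Kubernetes releases show the role as control-plane instead of master, so both are matched.

```shell
# Print the names of nodes whose ROLES column (3rd field) mentions
# master or control-plane; the header row is skipped.
master_nodes() {
  awk 'NR > 1 && $3 ~ /master|control-plane/ { print $1 }'
}

# Usage on the master node:
#   kubectl get nodes | master_nodes
```

On clusters where the corresponding node label is set, a label selector such as `kubectl get nodes -l node-role.kubernetes.io/control-plane` is an alternative.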

2. Run the following command to take ownership of the kubeconfig file (/etc/kubernetes/admin.conf):

`sudo chown -R vunet:vunet /etc/kubernetes/admin.conf`
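
To confirm the ownership change took effect, compare the file's owner and group with vunet:vunet. A minimal sketch using GNU stat (Linux); the function name file_owner is hypothetical:

```shell
# Print "owner:group" of a file (GNU stat format flags).
file_owner() {
  stat -c '%U:%G' "$1"
}

# Usage after running the chown command above:
#   [ "$(file_owner /etc/kubernetes/admin.conf)" = "vunet:vunet" ] && echo "ownership OK"
```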
