ContextStreams

Collect, contextualize and cast your raw data into actionable real-time business intelligence.

ContextStreams is the domain-centric observability pipeline in vuSmartMaps™. It ingests data at high speed from a variety of sources, transforms it by adding business context through dynamic data pipelines, and pushes it to external storage, where it can be used to build actionable business insights.

Why ContextStreams?

Collect

Ingest data at high speed from multiple data sources. You can leverage O11ySources, our preconfigured component blocks, without worrying about data format or structure (for example, a VMware Hypervisor O11ySource or an AWS EKS Cluster O11ySource).

Contextualize

In our data pipelines, you can transform, enrich and filter your data with out-of-the-box plugins. More importantly, you can leverage domain-centric adapters built to turn your logs, metrics and traces into intelligent insights.

Cast

Leverage our data store connectors to integrate the transformed data with destinations including VuNet’s HyperScale Data Store, TimescaleDB, Cloud or a downstream data store of your choice.

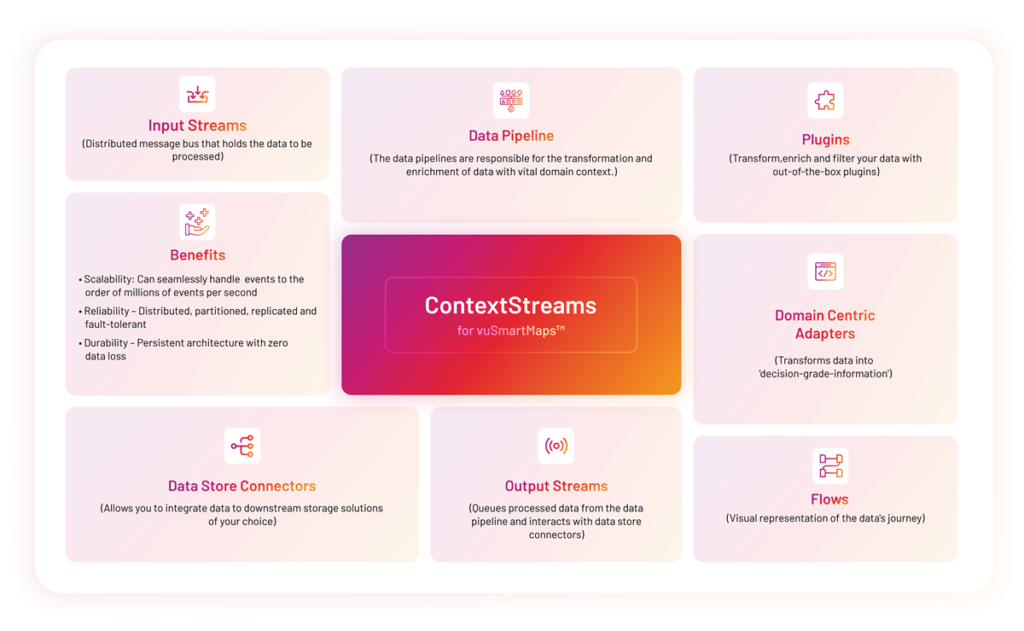

Fig: Interactive Diagram of ContextStreams

Components of ContextStreams

Input streams form a distributed message hub for the ingested data, which is then processed in real time by the data pipelines.

The Data Pipeline is the backbone of data transformation within ContextStreams. Data from the input streams is transformed by multiple plugins and domain-centric adapters.

● Plugins – Out-of-the-box, pre-built blocks that enrich, transform, correlate, filter and split your data, and more. The plugin library ranges from routine transformations such as time conversion, field enrichment, arithmetic operations and metric aggregation to complex actions such as micro-transaction stage tracking and dynamic, transaction ID-based correlation of events.

● Domain-Centric Adapters – Built on business, domain and environmental context, domain-centric adapters interpret data specific to an application, a compliance operation or a business function (e.g., adapters that understand payment transactions, transaction stages, payloads, error cases and error codes). ContextStreams ships with more than 25 domain adapters for the Banking and Financial Services Industry.
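To make the plugin idea concrete, here is a minimal Python sketch of a linear chain of plugin-like functions (time conversion, field enrichment, an arithmetic operation) followed by transaction ID-based correlation. It is not the ContextStreams plugin API: the event fields (`ts_ms`, `branch`, `latency_ms`, `txn_id`, `stage`), the lookup table and every function name are hypothetical, chosen only for illustration.

```python
from datetime import datetime, timezone
from typing import Any, Callable, Dict, List

# Hypothetical event shape: a flat dict of field names to values.
Event = Dict[str, Any]
Plugin = Callable[[Event], Event]

# Hypothetical enrichment table: branch code -> branch name.
BRANCH_NAMES = {"B01": "Mumbai", "B02": "Pune"}


def convert_time(event: Event) -> Event:
    """Time conversion: epoch milliseconds -> ISO-8601 UTC string."""
    ts = datetime.fromtimestamp(event["ts_ms"] / 1000, tz=timezone.utc)
    return {**event, "timestamp": ts.isoformat()}


def enrich_branch(event: Event) -> Event:
    """Field enrichment: add a readable branch name from a lookup table."""
    return {**event, "branch_name": BRANCH_NAMES.get(event["branch"], "unknown")}


def latency_seconds(event: Event) -> Event:
    """Arithmetic operation: derive latency in seconds from milliseconds."""
    return {**event, "latency_s": event["latency_ms"] / 1000}


def apply_plugins(events: List[Event], plugins: List[Plugin]) -> List[Event]:
    """Run every event through the plugins in order (a linear chain)."""
    result = []
    for event in events:
        for plugin in plugins:
            event = plugin(event)
        result.append(event)
    return result


def correlate_by_txn(events: List[Event]) -> Dict[str, List[str]]:
    """Correlation: group stage names by transaction ID, in arrival order."""
    stages: Dict[str, List[str]] = {}
    for event in events:
        stages.setdefault(event["txn_id"], []).append(event["stage"])
    return stages


raw = [
    {"ts_ms": 0, "branch": "B01", "latency_ms": 120, "txn_id": "T1", "stage": "auth"},
    {"ts_ms": 500, "branch": "B02", "latency_ms": 80, "txn_id": "T2", "stage": "auth"},
    {"ts_ms": 900, "branch": "B01", "latency_ms": 300, "txn_id": "T1", "stage": "settle"},
]
processed = apply_plugins(raw, [convert_time, enrich_branch, latency_seconds])
print(correlate_by_txn(processed))  # {'T1': ['auth', 'settle'], 'T2': ['auth']}
```

Each function returns a new dict rather than mutating its input, so a plugin can be reordered or removed without side effects on the others.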

The data pipeline supports multi-branching, which lets you construct sophisticated pipelines:

● Linear pipeline – A straightforward, sequential arrangement of plugins.

● Branch pipeline – Offers the flexibility to generate multiple processed outputs from a single input data stream.

● Multi-input pipeline – Enables the integration of various inputs into a single pipeline, directing them to a unified output stream.

● Multi-output pipeline – Accepts multiple inputs into the pipeline and branches them out into several outputs.

● Complex pipeline – A network of multiple blocks that branch and merge, intricately designed to execute specific business tasks.
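The topologies differ mainly in how blocks are wired together. The Python sketch below assumes a block is simply a function from one event to another; it composes a linear chain, fans one event out into named branches, and merges several timestamp-ordered input streams into one, then combines all three into a small "complex" pipeline. The helper names `linear`, `branch` and `merge_inputs` and the event fields are hypothetical and not part of ContextStreams.

```python
import heapq
from typing import Any, Callable, Dict, Iterable, Iterator, List

Event = Dict[str, Any]
Block = Callable[[Event], Event]


def linear(blocks: List[Block]) -> Block:
    """Linear pipeline: apply blocks one after another."""
    def run(event: Event) -> Event:
        for block in blocks:
            event = block(event)
        return event
    return run


def branch(event: Event, branches: Dict[str, Block]) -> Dict[str, Event]:
    """Branch pipeline: one input event, one processed output per branch."""
    # Each branch gets its own shallow copy so branches cannot affect each other.
    return {name: block(dict(event)) for name, block in branches.items()}


def merge_inputs(*streams: Iterable[Event]) -> Iterator[Event]:
    """Multi-input pipeline: merge streams that are each sorted by "ts"."""
    return heapq.merge(*streams, key=lambda e: e["ts"])


# A small "complex" pipeline: merge two inputs, normalize, then branch.
normalize = linear([
    lambda e: {**e, "amount": float(e["amount"])},
    lambda e: {**e, "currency": e.get("currency", "INR")},
])
upi = [{"ts": 1, "amount": "250"}, {"ts": 4, "amount": "75"}]
cards = [{"ts": 2, "amount": "990", "currency": "USD"}]

for event in merge_inputs(upi, cards):
    outputs = branch(normalize(event), {
        "metrics": lambda e: {"ts": e["ts"], "amount": e["amount"]},
        "audit": lambda e: {**e, "audited": True},
    })
    print(outputs["metrics"], outputs["audit"]["currency"])
```

`heapq.merge` keeps the combined stream in timestamp order as long as each input is already ordered, which is a simplifying assumption; a real streaming system also has to deal with late and out-of-order events.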

Output streams queue the processed data from the data pipeline and hand it to the DataStore Connectors.

DataStore Connectors let you send data to VuNet’s HyperScale Data Store, TimescaleDB, the cloud or a downstream data store of your choice.

The Flows feature provides a comprehensive visual representation of the data’s journey within ContextStreams.

Features

Seamlessly ingest data at scale in real time, handling large volumes at high speed.

ContextStreams can handle on the order of millions of events per second, thanks to resource-efficient multi-threading and a horizontally scalable architecture.

Use the “Preview” feature to verify and validate your streamed data. The data pipelines offer tailored, granular debug options at every stage, whether for a published pipeline, a draft or a specific block.

The platform is persistent and guarantees no data loss during ingestion and transformation. Data connectors are decoupled from the data pipeline, so a failing endpoint cannot compromise the data.
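The value of decoupling connectors from the pipeline can be shown with a small in-memory buffer. In this Python sketch, the pipeline only appends processed events, and a connector drains them, removing an event only after a successful write; when the endpoint fails, draining stops and the remaining events stay queued for a retry. `OutputBuffer` and `flaky_store` are hypothetical names, and because the buffer lives in memory, it demonstrates the decoupling, not the persistence, that the platform provides.

```python
from collections import deque
from typing import Any, Callable, Deque, Dict

Event = Dict[str, Any]


class OutputBuffer:
    """Hypothetical buffer between a pipeline and a datastore connector.

    In memory only: unlike a persistent, replicated queue, it would not
    survive a process crash.
    """

    def __init__(self) -> None:
        self._pending: Deque[Event] = deque()

    def publish(self, event: Event) -> None:
        """Called by the pipeline; never waits on the connector."""
        self._pending.append(event)

    def drain(self, write: Callable[[Event], None]) -> int:
        """Deliver events in order; on failure keep the rest for a retry."""
        delivered = 0
        while self._pending:
            try:
                write(self._pending[0])  # peek, do not remove yet
            except ConnectionError:
                break  # endpoint down: leave the event queued
            self._pending.popleft()  # remove only after a successful write
            delivered += 1
        return delivered

    def __len__(self) -> int:
        return len(self._pending)


buffer = OutputBuffer()
for i in range(3):
    buffer.publish({"id": i})

stored = []
attempts = {"n": 0}


def flaky_store(event: Event) -> None:
    """Simulated endpoint that fails on its second call only."""
    attempts["n"] += 1
    if attempts["n"] == 2:
        raise ConnectionError("store unavailable")
    stored.append(event["id"])


print(buffer.drain(flaky_store), len(buffer))  # 1 2
print(buffer.drain(flaky_store), stored)       # 2 [0, 1, 2]
```

The key design choice is peek-then-remove: an event leaves the buffer only after the endpoint has accepted it, so a failure in the middle of a drain never drops data.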

Transform data through filtering, masking, mathematical operations, domain-related extraction, enrichment and interpretation, using pre-defined and custom plugins.
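As a rough illustration of filtering and masking applied together, the Python sketch below drops DEBUG-level events and masks card numbers in the remaining messages, keeping only the last four digits. The field names (`level`, `message`) and the rule that a card number is an unbroken run of 16 digits are assumptions made for the example; it does not reproduce any ContextStreams plugin.

```python
import re
from typing import Any, Dict, Iterable, Iterator

Event = Dict[str, Any]

# Assumption: card numbers appear as exactly 16 consecutive digits.
CARD_NUMBER = re.compile(r"\b(\d{12})(\d{4})\b")


def mask_card_numbers(text: str) -> str:
    """Masking: replace the first 12 digits of each card number with '*'."""
    return CARD_NUMBER.sub(lambda m: "*" * 12 + m.group(2), text)


def filter_and_mask(events: Iterable[Event]) -> Iterator[Event]:
    """Filtering + masking: drop DEBUG events, mask card numbers in the rest."""
    for event in events:
        if event.get("level") == "DEBUG":
            continue
        yield {**event, "message": mask_card_numbers(event["message"])}


logs = [
    {"level": "INFO", "message": "payment from card 4111111111111111 approved"},
    {"level": "DEBUG", "message": "cache warmed"},
]
for event in filter_and_mask(logs):
    print(event["message"])  # payment from card ************1111 approved
```

Masking inside the pipeline, before data reaches any data store connector, keeps sensitive values out of every downstream destination.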

Achieve cost and storage efficiency by transforming logs into metrics.
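Converting logs into metrics generally means replacing many individual log lines with one summary record per time window. The Python sketch below assumes each log carries an epoch-seconds `ts`, a `status` and a `latency_ms` field (hypothetical names). It computes a count, an error count and an average latency for each 60-second window, a simple stand-in for the metrics aggregation the platform performs.

```python
from collections import defaultdict
from typing import Any, Dict, Iterable

Event = Dict[str, Any]


def logs_to_metrics(logs: Iterable[Event], window_s: int = 60) -> Dict[int, Dict[str, Any]]:
    """Aggregate raw log lines into one metric record per time window."""
    buckets = defaultdict(lambda: {"count": 0, "errors": 0, "latency_sum": 0.0})
    for log in logs:
        window_start = log["ts"] - log["ts"] % window_s  # align to window start
        bucket = buckets[window_start]
        bucket["count"] += 1
        bucket["errors"] += int(log["status"] != "OK")
        bucket["latency_sum"] += log["latency_ms"]
    # Emit windows in time order with the average latency per window.
    return {
        start: {
            "count": b["count"],
            "errors": b["errors"],
            "avg_latency_ms": b["latency_sum"] / b["count"],
        }
        for start, b in sorted(buckets.items())
    }


logs = [
    {"ts": 0, "status": "OK", "latency_ms": 100},
    {"ts": 30, "status": "FAILED", "latency_ms": 200},
    {"ts": 65, "status": "OK", "latency_ms": 50},
]
print(logs_to_metrics(logs))
# {0: {'count': 2, 'errors': 1, 'avg_latency_ms': 150.0}, 60: {'count': 1, 'errors': 0, 'avg_latency_ms': 50.0}}
```

Here, three log lines become two metric records. At production volumes, where each window may hold thousands of lines, storing only the per-window summaries is where the storage saving comes from.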

Fig: Bento Diagram of ContextStreams

Benefits

- Unlock business insights at lower costs – Leverage our domain-centric adapters and plugins to extract deep and meaningful business context from your Observability data. This enables a view into business KPIs and links business performance with IT metrics.

- Flexibility – Gain full autonomy over your data’s journey, from input to insight. Harness the three pillars of observability (logs, metrics, and traces) from your chosen sources and efficiently store the processed data on a cost-effective storage platform of your choice.

- Reliability – The platform is distributed, partitioned, replicated and fault-tolerant.

- Scalability – Seamlessly handle the streaming of millions of events per second, ensuring your infrastructure scales with your business needs.

- Durability – A persistent architecture ensures zero data loss.